State Machine

There are many components required to achieve the melee/bullet-hell style of gameplay this project focuses on: the user interface, a leveling system, object pooling, a game manager and more. None of them, though, holds a candle to the role played by the artificial intelligence; without it, there wouldn't be much of a game. I'm going to break down the design of my stack-based finite state machine (FSM) to offer others some insight into my approach. Feel free to critique.

Reflex Agent

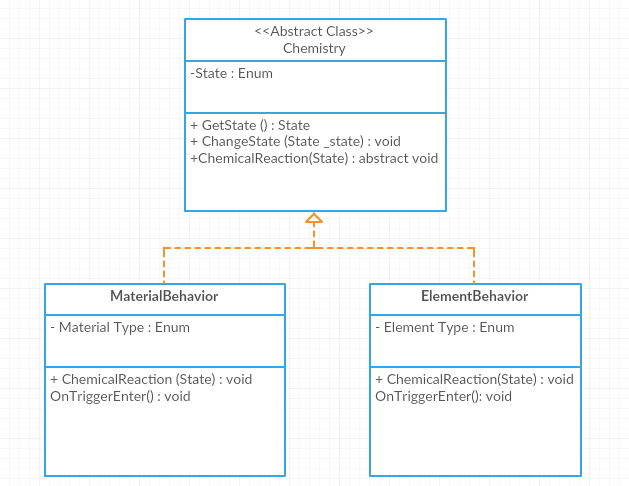

I've created a reflex agent for this project. It was the best choice because most of the agent's decisions are reactions to the player's actions. The term agent refers to the AI program or machine. A reflex agent is reactive: it changes state based on what it currently perceives, such as the player's position or actions, rather than by planning ahead.
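To make the reflex-agent idea concrete, here is a minimal Python sketch of a simple reflex agent: it maps the current percept directly to an action through condition-action rules checked in priority order, with no memory and no planning. The `Percept` and `Action` types, the distance thresholds and the rule set are hypothetical illustrations, not taken from this project.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Action(Enum):
    """Hypothetical behaviours for a melee/bullet-hell enemy."""
    IDLE = auto()
    CHASE = auto()
    ATTACK = auto()
    DODGE = auto()


@dataclass(frozen=True)
class Percept:
    """Hypothetical snapshot of what the agent observes this frame."""
    distance_to_player: float  # world units (assumed)
    player_is_attacking: bool


# Condition-action rules, checked in priority order.
# The thresholds are placeholder values chosen for illustration only.
RULES = [
    (lambda p: p.player_is_attacking and p.distance_to_player < 2.0, Action.DODGE),
    (lambda p: p.distance_to_player < 1.5, Action.ATTACK),
    (lambda p: p.distance_to_player < 10.0, Action.CHASE),
]


def decide(percept: Percept) -> Action:
    """Simple reflex agent: the result depends only on the current percept."""
    for condition, action in RULES:
        if condition(percept):
            return action
    return Action.IDLE  # no rule fired


if __name__ == "__main__":
    for p in [Percept(20.0, False), Percept(5.0, False),
              Percept(1.0, False), Percept(1.0, True)]:
        print(p, "->", decide(p).name)
```

Rule order matters here: dodging is checked before attacking, so an attacking player at close range triggers a dodge rather than a counterattack.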

Finite State Machine

A finite-state machine (FSM), also called a finite-state automaton (FSA, plural: automata), a finite automaton, or simply a state machine, is a mathematical model of computation. It is an abstract machine that can be in exactly one of a finite number of states at any given time. This definition comes from Wikipedia, and I believe it explains the concept perfectly.
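The definition can be illustrated with a minimal Python sketch: a finite set of states, a transition table keyed on (state, event), and a machine that holds exactly one state at a time. The states, events and transitions are hypothetical enemy behaviours chosen for illustration, not this project's actual states.

```python
from enum import Enum, auto


class State(Enum):
    PATROL = auto()
    CHASE = auto()
    ATTACK = auto()


class Event(Enum):
    PLAYER_SPOTTED = auto()
    PLAYER_IN_RANGE = auto()
    PLAYER_OUT_OF_RANGE = auto()
    PLAYER_LOST = auto()


# Transition table: (current state, event) -> next state.
# Any pair not listed leaves the state unchanged.
TRANSITIONS = {
    (State.PATROL, Event.PLAYER_SPOTTED): State.CHASE,
    (State.CHASE, Event.PLAYER_IN_RANGE): State.ATTACK,
    (State.CHASE, Event.PLAYER_LOST): State.PATROL,
    (State.ATTACK, Event.PLAYER_OUT_OF_RANGE): State.CHASE,
}


class FiniteStateMachine:
    """Holds exactly one state from a finite set at any given time."""

    def __init__(self, initial: State) -> None:
        self.state = initial

    def handle(self, event: Event) -> State:
        # Look up the transition; fall back to staying in the current state.
        self.state = TRANSITIONS.get((self.state, event), self.state)
        return self.state


if __name__ == "__main__":
    fsm = FiniteStateMachine(State.PATROL)
    for e in [Event.PLAYER_SPOTTED, Event.PLAYER_IN_RANGE, Event.PLAYER_OUT_OF_RANGE]:
        print(e.name, "->", fsm.handle(e).name)
```

Note that a plain FSM like this only knows its current state; when it leaves a state, nothing records where it came from.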

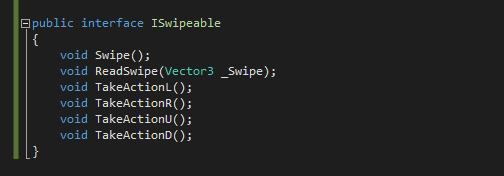

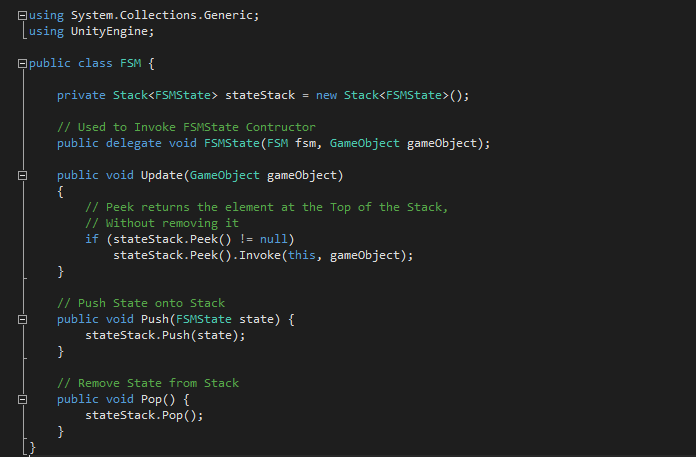

Stack Finite State Machine

Using a stack to manage a state machine has tremendous benefits, making states easier to manage and modify: pushing a new state suspends the current one, and popping it returns control to the state beneath, so a state does not need to know which state to hand control back to. A stack is a container adapter representing a last-in, first-out (LIFO) collection of objects. Container adapters are classes that use an encapsulated object of a specific container class as their underlying container, providing a specific set of member functions to access its elements. Here is a little snippet of the class responsible for managing the AI stacks:

Link to video: Combat
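The class snippet mentioned above is not reproduced in this section, so the following is an independent, minimal Python sketch of the general stack-based FSM idea, not the author's class. It assumes states are plain callables that receive the machine on each update; the `Enemy` class, its states (`patrol`, `chase`, `stunned`) and the stun counter are hypothetical.

```python
from typing import Callable, List, Optional

# A state is any callable that takes the machine (simplifying assumption).
State = Callable[["StackFSM"], None]


class StackFSM:
    """Stack-based FSM: the state on top of the stack is the active one."""

    def __init__(self) -> None:
        self._stack: List[State] = []

    def push(self, state: State) -> None:
        # Suspend the current state by placing a new one on top of it.
        self._stack.append(state)

    def pop(self) -> Optional[State]:
        # Finish the active state; the one beneath it becomes active again.
        return self._stack.pop() if self._stack else None

    def current(self) -> Optional[State]:
        return self._stack[-1] if self._stack else None

    def update(self) -> None:
        # Run only the active (top) state once per frame.
        state = self.current()
        if state is not None:
            state(self)


class Enemy:
    """Hypothetical enemy whose states are bound methods."""

    def __init__(self) -> None:
        self.fsm = StackFSM()
        self.player_visible = False
        self.stun_frames = 0
        self.fsm.push(self.patrol)

    def patrol(self, fsm: StackFSM) -> None:
        if self.player_visible:
            fsm.push(self.chase)  # patrol is suspended, not discarded

    def chase(self, fsm: StackFSM) -> None:
        if not self.player_visible:
            fsm.pop()  # resume whatever was underneath (patrol)

    def stunned(self, fsm: StackFSM) -> None:
        self.stun_frames -= 1
        if self.stun_frames <= 0:
            fsm.pop()  # return to the interrupted state

    def hit(self, frames: int) -> None:
        # Interrupt any state by pushing "stunned" on top of it.
        self.stun_frames = frames
        self.fsm.push(self.stunned)


if __name__ == "__main__":
    enemy = Enemy()
    enemy.player_visible = True
    enemy.fsm.update()   # patrol pushes chase
    enemy.hit(frames=1)  # stunned interrupts chase
    enemy.fsm.update()   # stun expires, popping back to chase
    print(enemy.fsm.current().__name__)  # chase
```

The point of the design is in `stunned`: it simply pops itself, and whichever state it interrupted (chase here, but it could be patrol) resumes without either state tracking the other.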